Context-Aware Augmented Reality for Cognitive Assistance in EMS

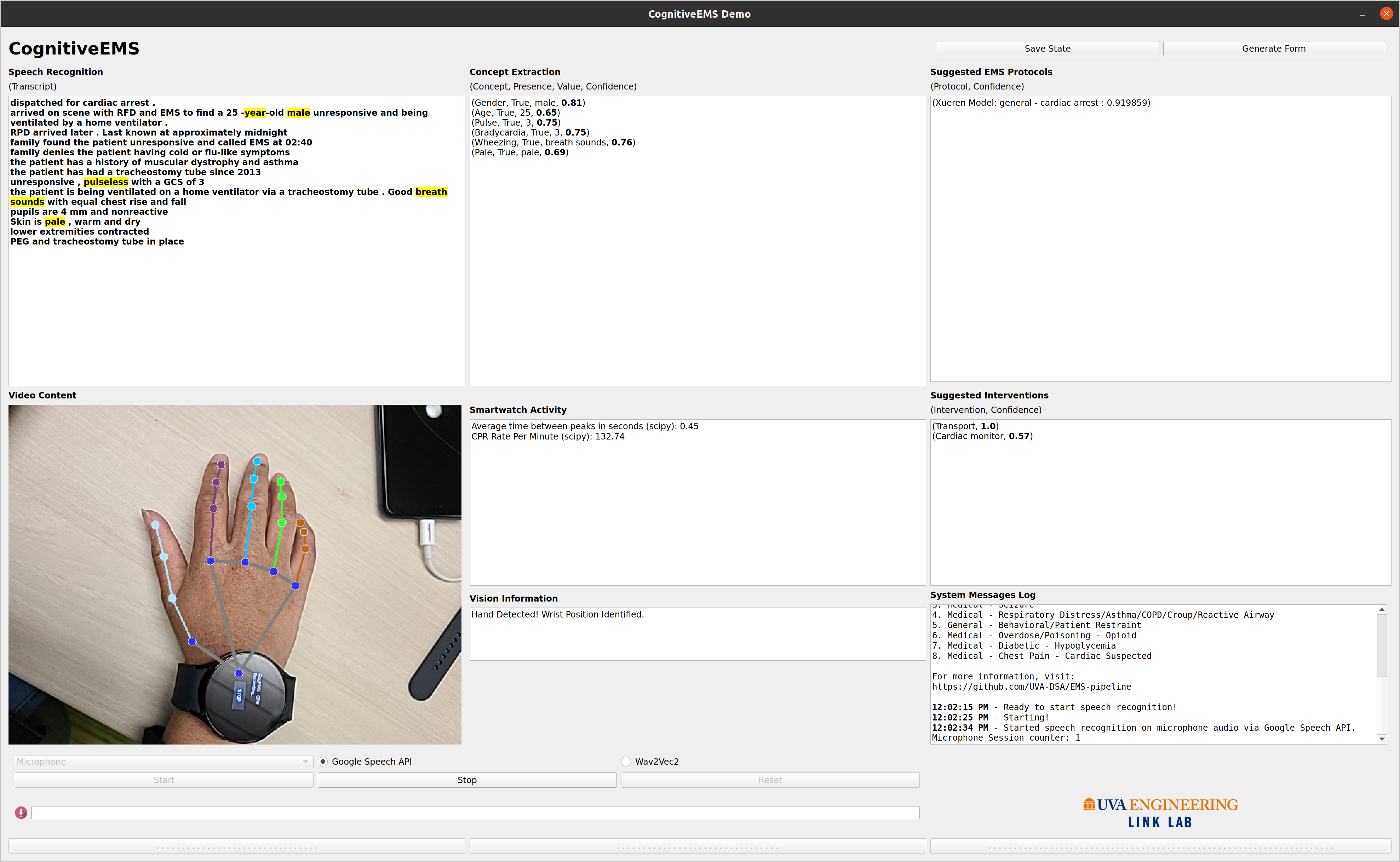

The main objective of this project is to develop an AR cognitive assistant system that supports responders by inferring incident context in real time from observations at the scene and by giving them context-dependent feedback on the timely and safe execution of response actions. The proposed system will integrate AR interfaces (with audio and video measurement capabilities) with responder-worn sensors and data analytics algorithms (for speech recognition, natural language processing (NLP), image/video classification, and activity recognition) to perform data fusion and context inference on multimodal sensor data from multiple responders at the scene.
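To make the data fusion step more concrete, the following minimal sketch shows one simple way that cues from several modalities and responders could be combined into a single context estimate. It is an illustration under stated assumptions, not the project's actual method: the `Observation` fields, the `infer_context` function, the 60-second window, and the sum-and-normalize fusion rule are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One hypothetical sensor-derived cue; all field names are illustrative."""
    responder_id: str
    modality: str      # e.g. "speech", "video", "wearable"
    label: str         # candidate incident context, e.g. "cardiac_arrest"
    confidence: float  # classifier score in [0, 1]
    timestamp: float   # seconds since the start of the incident


def infer_context(observations, now, window_s=60.0):
    """Return a normalized score for each candidate context label.

    Simple late fusion (an assumption, not the project's algorithm): sum the
    confidences of all observations from the last `window_s` seconds, then
    normalize so the scores sum to 1. Returns {} if nothing is in the window.
    """
    totals = defaultdict(float)
    for obs in observations:
        if 0.0 <= now - obs.timestamp <= window_s:
            totals[obs.label] += obs.confidence
    total = sum(totals.values())
    if total == 0.0:
        return {}
    return {label: score / total for label, score in totals.items()}
```

A caller would pass in the observations gathered from all responders so far and read off the highest-scoring label as the current context hypothesis. A real system would likely weight modalities differently and model uncertainty more carefully.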

We will combine knowledge of EMS protocol guidelines and pre-collected incident data with machine learning methods to develop a computational framework for risk-aware decision support that captures the behavioral models of EMS protocols and the requirements for the safe execution of interventions. The proposed framework will be used to track the timing and quality of response actions in real time and to provide just-in-time smart reminders customized to each responder.
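The idea of timing-based reminders can be sketched in a few lines. The example below is a simplified illustration only: `ProtocolStep`, `due_reminders`, the step names, and every deadline value are hypothetical placeholders, not taken from any EMS protocol, and the sketch ignores action quality and per-responder customization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtocolStep:
    """One timed step in a hypothetical protocol model."""
    name: str
    deadline_s: float  # seconds after incident start by which the step is due


def due_reminders(steps, completed, elapsed_s, lead_s=30.0):
    """Return names of incomplete steps that are overdue or due within `lead_s`.

    `completed` is the set of step names already observed as done;
    `elapsed_s` is the time since the start of the incident.
    """
    return [
        step.name
        for step in steps
        if step.name not in completed and elapsed_s >= step.deadline_s - lead_s
    ]


# Placeholder steps and deadlines for illustration only (not clinical guidance).
steps = [
    ProtocolStep("check_pulse", 60.0),
    ProtocolStep("start_compressions", 120.0),
    ProtocolStep("attach_monitor", 300.0),
]
reminders = due_reminders(steps, completed={"check_pulse"}, elapsed_s=100.0)
print(reminders)  # ['start_compressions']
```

In a fuller design, the set of completed steps would come from the activity recognition and speech pipelines rather than being supplied by hand, and each reminder would be routed to the responder assigned to that step.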

Emergency medical first responders and firefighters have access to a substantial amount of data at the incident scene:

- Observations and communications with the center and other responders

- Sensor data from wearables, mobile, and IoT devices

- Physiological data from patient monitors and medical devices

Challenges in the analysis of emergency response data:

- Making sense of a substantial amount of information

- Inaccuracies and incompleteness of manually collected data

- Cognitive overload: significant human cognitive effort is required to manually interpret and log data at the incident scene, effort that could be better spent assessing effective response actions.

- Resiliency: disconnections caused by poor network connectivity or equipment faults may compromise first responders' decision making and response.